Summary

This lecture introduces metaheuristics and demonstrates how Genetic Algorithms can be applied for hyperparameter optimization in ridge regression.

Metaheuristics

“meta” = beyond, higher level

“heuristic” = rule of thumb, practical method

Metaheuristics are high-level strategies designed to guide lower-level heuristics to explore the solution space effectively. They are all stochastic algorithms that use randomization and global exploration.

- Randomization provides a good way to move away from the local search to the global search. (i.e. escape local optima)

- It is suitable for non-linear modeling and global optimization.

Goal of using metaheuristics is to find a good enough solution in a reasonable time frame, rather than the optimal solution.

Main components of metaheuristics

- Intensification (exploitation)

- The search in a local region by exploiting the information that a current good solution is found in this region.

- It aims to refine and improve the current solution by exploring its neighborhood.

- Basically, selecting the best each time converges to a local optimum.

- Diversification (exploration)

- Generates diverse solutions to explore different regions of the solution space.

- It helps to avoid premature convergence to local optima by introducing randomness and exploring new areas.

- It is important to maintain a balance between intensification and diversification to effectively explore the solution space.

Types of Algorithms

- Population-based

- Maintain and evolve a population of solutions.

- Examples: Genetic Algorithms, Particle Swarm Optimization

- Trajectory-based

- Start from a single solution and iteratively improve it.

- Examples: Simulated Annealing

Genetic Algorithms (GAs)

Genetic Algorithms (GAs) are a type of population-based metaheuristic inspired by the process of natural selection and genetics. They are used to solve optimization and search problems by mimicking the process of evolution.

Definition (Terminology)

There are several key concepts that need to be defined before we can describe how GAs work:

- Chromosome: Represents a potential solution to the problem.

- Gene: A part of a chromosome that represents a specific parameter or feature of the solution.

- Ex: For chromosome “ABCD”, each letter is a gene (A, B, C, D).

- Population: A collection of chromosomes.

- Offspring: New chromosomes created through genetic operations such as crossover and mutation.

- Fitness Function: A function that evaluates how well a chromosome solves the problem.

Advantages

- GA is gradient-free, making it suitable for non-differentiable, discontinuous, or noisy objective functions.

- GA can be easily parallelized, allowing for efficient exploration of the solution space.

- Different parameters and groups of encoded strings can be manipulated independently, providing flexibility in the search process.

Disadvantages

- Formulation of the fitness function, choosing parameters, determining the selection criteria, and population size can be challenging and may require domain knowledge.

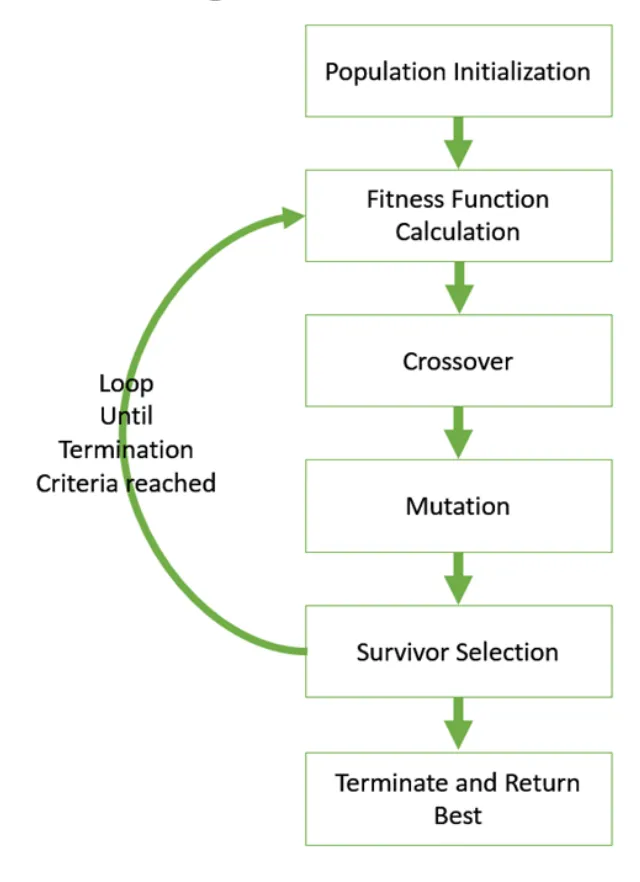

Basic Steps of Genetic Algorithms

- Population Initialization

- Generate chromosomes randomly to form the initial population.

- is a hyperparameter that needs to be set. Once set, it remains constant throughout the algorithm.

- Too small: May not explore the solution space effectively, leading to premature convergence.

- Too large: Increases computational cost and may slow down convergence.

- Fitness Evaluation

- is the objective function to optimize.

- is a chromosome (solution).

- Crossover

- Select pairs of chromosomes (parents) from the current population based on their fitness scores.

- Combine the genes of the parents to create new chromosomes (offspring).

Parent 1: A B C | D E FParent 2: 1 2 3 | 4 5 6Offspring: A B C | 4 5 61 2 3 | D E F- There are many crossover techniques, such as one-point crossover, two-point crossover, and uniform crossover, etc.

- Mutation

- Introduce random changes to the genes of the offspring chromosomes with a low probability (mutation rate).

- This helps to maintain genetic diversity and explore new areas of the solution space. It does not happen to every offspring.

Original: A B C D E FMutated: A B X D E F - Survivor Selection

- Population size must remain constant, so we need to select which chromosomes will survive to the next generation.

- Common strategies include:

- Generational Replacement: Replace the entire population with the new offspring.

- Elitism: Retain a few of the best chromosomes from the current population and fill the rest with new offspring.

- Go back to step 2 and repeat until a stopping criterion is met.

- Termination

- The algorithm stops when a predefined stopping criterion is met, such as reaching a maximum number of generations or achieving a satisfactory fitness level.

Example Hyperparameter Optimization with GAs

Given Problem:

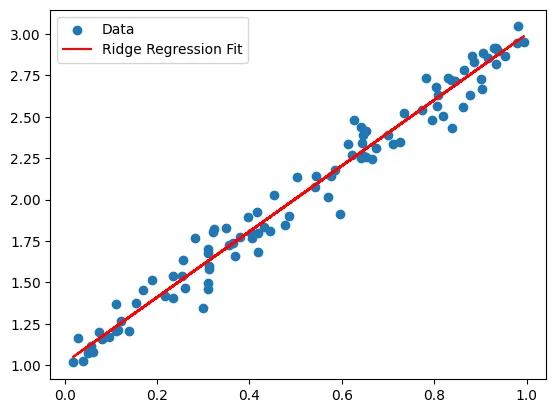

Use the following assumptions to generate the dataset:

- True model:

- : Generate 100 random values.

- : Generate 100 values with the above and add error with a normal distribution.

- e.g.

- Use the same loss function on the upper and lower level.

- Ridge regression loss:

Steps to solve:

- Write lower level optimization problem

- Write Upper Level evaluation

- Genetic Algorithm HPO

- Test

Example (Genetic Algorithm Python Example)

import numpy as npfrom sklearn.linear_model import Ridgefrom sklearn.model_selection import train_test_splitimport matplotlib.pyplot as pltimport random

# Generate Datax = np.random.rand(100)y = 2 * x + 1 + np.random.normal(0, 0.1, 100)

# Split DataX_train, X_val, y_train, y_val = train_test_split(x.reshape(-1, 1), y, test_size=0.2, random_state=42)

# Lower Level Optimizationdef lower_opt(X_train, y_train, lambda_): model = Ridge(alpha=lambda_) model.fit(X_train, y_train)

m = model.coef_[0] b = model.intercept_ return m, b

# Upper Level Evaluationdef upper_eval(X_val, y_val, m, b, lambda_): obj = np.sum((y_val - (m * X_val.flatten() + b)) ** 2) + lambda_ * (m ** 2) return obj

# Genetic Algorithm HPOdef genetic_algorithm_hpo(X_train, y_train, X_val, y_val, pop_size, num_generations, mutation_rate, crossover_rate): # Step 1: Initialize Population population = [random.uniform(0, 1) for _ in range(pop_size)]

# Generation for generation in range(num_generations): # Step 2: Evaluate Fitness fitness_scores = [] for lambda_ in population: m, b = lower_opt(X_train, y_train, lambda_) fitness = upper_eval(X_val, y_val, m, b, lambda_) fitness_scores.append(fitness)

# Step 3: Selection (Tournament Selection) - Select the best half --> exploitation selected_parents = [population[i] for i in np.argsort(fitness_scores)[:pop_size // 2]]

new_population = selected_parents.copy() while len(new_population) < pop_size: # Step 4: Crossover --> exploration if random.random() < crossover_rate: parent1, parent2 = random.sample(selected_parents, 2) child = 0.5 * parent1 + 0.5 * parent2 else: child = random.choice(selected_parents)

# Step 5: Mutation if random.random() < mutation_rate: child += random.uniform(-0.1, 0.1) child = min(max(child, 0.0001), 1)

new_population.append(child)

population = new_population # update population each generation

# Recalculate fitness for final population fitness_scores = [] for lambda_ in population: m, b = lower_opt(X_train, y_train, lambda_) fitness = upper_eval(X_val, y_val, m, b, lambda_) fitness_scores.append(fitness)

best_lambda = population[np.argmin(fitness_scores)] return best_lambda

# Run Genetic Algorithmbest_lambda = genetic_algorithm_hpo(X_train, y_train, X_val, y_val, pop_size=20, num_generations=50, mutation_rate=0.1, crossover_rate=0.7)

# Check Final Modelfinal_model = Ridge(alpha=best_lambda)final_model.fit(X_train, y_train)

# Plot Resultsplt.scatter(x, y, label='Data')plt.plot(x, final_model.coef_ * x + final_model.intercept_, color='red', label='Ridge Regression Fit')plt.legend()plt.show()Results: