Summary (Optimization in MMM)

MMM optimization involves roughly three steps:

- Variable transformation (adstock, saturation) → requires optimizing 3 hyperparameters.
  - Adstock: Captures the delayed effect of advertising over time. How long does the effect of an ad last?
    - Hyperparameter: decay rate.
  - Saturation: Accounts for diminishing returns on ad spend. How quickly does additional spend start yielding smaller returns?
    - Hyperparameters: saturation point, saturation rate.

- Multi-objective optimization minimizing the error between MMM predictions and the ground truth.

- Optimal budget allocation.
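The two transformations can be sketched in a few lines. This is a minimal illustration under assumptions the notes do not state: adstock is taken to be the common geometric form (each period carries over `decay_rate` times the previous period's effect), and saturation is taken to be a Hill curve in which `saturation_point` is the spend at which the response reaches half its maximum and `saturation_rate` controls how steep the curve is. Other MMM tools use different functional forms, and the function names are hypothetical.

```python
import numpy as np


def geometric_adstock(spend, decay_rate):
    """Assumed geometric adstock: a_t = x_t + decay_rate * a_{t-1}."""
    spend = np.asarray(spend, dtype=float)
    adstocked = np.zeros_like(spend)
    carry = 0.0
    for t, x in enumerate(spend):
        carry = x + decay_rate * carry  # part of last period's effect carries over
        adstocked[t] = carry
    return adstocked


def hill_saturation(x, saturation_point, saturation_rate):
    """Assumed Hill curve: equals 0.5 at x = saturation_point; saturation_rate sets steepness."""
    x = np.asarray(x, dtype=float)
    return x**saturation_rate / (x**saturation_rate + saturation_point**saturation_rate)


print(geometric_adstock([100, 0, 0], decay_rate=0.5))
print(hill_saturation(50, saturation_point=50, saturation_rate=2))
```

A single burst of spend of 100 with `decay_rate=0.5` is spread into 100, 50, 25 over three periods, and the Hill curve returns 0.5 exactly at the saturation point. These three numbers (decay rate, saturation point, saturation rate) are the hyperparameters the upper-level search has to tune.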

Model Parameter

- Configuration variable that is internal to the model and whose value can be estimated from data.

- e.g., weights in linear regression, weights and biases in neural networks.

Characteristics

- They are required by the model when making predictions.

- Their values define the skill of the model on your problem.

- They are estimated or learned from data.

- They are often not set manually by the practitioner.

- They are often saved as part of the learned model.

Hyperparameter

- Configuration variable that is external to the model and whose value cannot be estimated from data.

- e.g., learning rate, number of hidden layers, number of clusters.

Characteristics

- They are often used in the process to help estimate model parameters.

- They can often be set using heuristics.

- They are often tuned for a given predictive modeling problem.
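A small sketch of the distinction, assuming a one-feature ridge regression like the one in the code practice: the penalty weight `lam` is a hyperparameter supplied before fitting, while the slope `m` and intercept `b` are model parameters computed from the data. The closed form below (intercept left unpenalized) is the standard ridge solution for this loss; the function name is hypothetical.

```python
import numpy as np


def fit_ridge_line(x, y, lam):
    """Return model parameters (m, b) minimizing sum((y - (m*x + b))**2) + lam * m**2.

    lam is a hyperparameter: it is fixed before fitting, not learned from the data.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()  # centering separates the slope from the intercept
    m = (xc @ yc) / (xc @ xc + lam)      # the penalty shrinks the slope toward 0
    b = y.mean() - m * x.mean()          # unpenalized intercept
    return m, b


x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2 * x + 1
print(fit_ridge_line(x, y, lam=0.0))  # slope 2, intercept 1
print(fit_ridge_line(x, y, lam=5.0))  # slope 1, intercept 2.5
```

Changing `lam` changes which `m` and `b` the fit produces, which is exactly why hyperparameters have to be tuned in an outer loop rather than learned alongside the parameters.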

Bilevel optimization

Bi-level Optimization has a nested structure where one optimization problem is contained within another. It consists of an upper-level problem and a lower-level problem, with the solution of the upper-level problem depending on the solution of the lower-level problem.

- Hyperparameter: The variable we want to optimize (e.g., learning rate, number of hidden units, regularization strength).

- Search space: The set of values the hyperparameter can take.

- Model parameter: The values the model learns through training (e.g., weights and biases of a neural network).

- Dataset: Split into
  - Training data: Used to train the model parameters.
  - Validation data: Used (1) to evaluate the generalization performance of the trained model and (2) to find the optimal hyperparameter.

- Training loss function: The criterion minimized during training, measuring how well the model fits the training data.

- Validation loss function: The criterion used to evaluate the quality of a hyperparameter value, measuring how well the model trained on the training data performs on unseen validation data.
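Putting these pieces together, hyperparameter optimization is commonly written as the bilevel problem below. The symbols are standard notation chosen here for illustration, not necessarily the notation of the original notes: $\lambda$ is the hyperparameter, $\Lambda$ the search space, $w$ the model parameters, $\mathcal{D}_{\text{train}}$ and $\mathcal{D}_{\text{val}}$ the training and validation data, and $\mathcal{L}_{\text{train}}$, $\mathcal{L}_{\text{val}}$ the two losses.

$$
\lambda^{*} = \arg\min_{\lambda \in \Lambda} \; \mathcal{L}_{\text{val}}\big(w^{*}(\lambda);\, \mathcal{D}_{\text{val}}\big)
\quad \text{s.t.} \quad
w^{*}(\lambda) = \arg\min_{w} \; \mathcal{L}_{\text{train}}\big(w;\, \lambda,\, \mathcal{D}_{\text{train}}\big)
$$

The upper level searches over $\lambda$, but every evaluation of its objective first requires solving the lower-level training problem for that $\lambda$.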

Example

- Select a feasible hyperparameter from the search space . For example, let .

- Solve the lower-level problem to find the optimal model parameter given the hyperparameter .
  - Solve with respect to .
  - The lower-level problem is to minimize the training loss with respect to .
  - The optimal solution is .

- Evaluate the upper-level objective function using the obtained .
  - The upper-level problem is to minimize the validation loss .
  - Substituting and , we get .

Using Grid Search

Definition (What is Discretization?)

Discretization: The process of transforming continuous variables or functions into discrete counterparts.

- Simplifies the search space, making it easier to explore.

- Enables efficient search strategies.

- Keeps the number of evaluations within computational constraints.

- Makes evaluations easier to parallelize.

Grid Search is a method for finding the optimal hyperparameters by exhaustively trying all possible combinations based on a predefined grid of hyperparameter values.

- Most basic hyperparameter optimization method.

- Full factorial design

- Evaluates all possible combinations of hyperparameters.

- Guarantees finding the best combination on the grid given enough resources; this is the global optimum only if the optimum lies on a grid point.

- Curse of dimensionality: the computational cost grows exponentially with the number of hyperparameters.

The grid is the Cartesian product of the candidate values of each hyperparameter.
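A minimal grid search sketch: it discretizes one continuous range with `np.linspace`, builds the full factorial grid with `itertools.product`, and evaluates every combination. The hyperparameter names reuse the MMM terms from the summary, but the toy objective is hypothetical and stands in for an expensive train-and-validate run.

```python
import itertools

import numpy as np


def grid_search(objective, grid):
    """Evaluate every combination in the Cartesian product of the per-hyperparameter value lists."""
    names = list(grid)
    best_params, best_value, n_evals = None, float("inf"), 0
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        value = objective(**params)  # stands in for training + validating a model
        n_evals += 1
        if value < best_value:
            best_params, best_value = params, value
    return best_params, best_value, n_evals


# Discretize a continuous range into 5 points and combine it with a 3-value list
grid = {
    "decay_rate": np.linspace(0.0, 1.0, 5),  # 0.0, 0.25, 0.5, 0.75, 1.0
    "saturation_rate": [1, 2, 3],
}


def toy_objective(decay_rate, saturation_rate):
    # hypothetical objective with its minimum at decay_rate=0.5, saturation_rate=2
    return (decay_rate - 0.5) ** 2 + (saturation_rate - 2) ** 2


best_params, best_value, n_evals = grid_search(toy_objective, grid)
print(best_params, best_value, n_evals)
```

Here 5 × 3 = 15 combinations are evaluated. The evaluation count is the product of the per-hyperparameter grid sizes, which is where the exponential cost comes from as hyperparameters are added.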

Using Random Search

With a limited number of trials, random search tries a new value of every hyperparameter in each trial, whereas grid search reuses the same few values per hyperparameter. This increases the chance of finding good values for the hyperparameters that matter most.

Random Search is a method for finding the optimal hyperparameters by randomly sampling combinations of hyperparameter values from predefined ranges.

- Works better than grid search when some hyperparameters matter more than others, which is usually the case.

- However, there is no way to know in advance how many samples are needed to find a good combination.
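The sketch below contrasts the two strategies on the same budget of 9 trials, using a hypothetical objective in which only the first hyperparameter matters. The 3 × 3 grid tries only 3 distinct values of that hyperparameter, while random search tries a different value in every trial. Function names and the seed are illustrative.

```python
import itertools
import random


def toy_objective(important, unimportant):
    # hypothetical objective: only `important` affects the result
    return (important - 0.37) ** 2


def random_search(objective, bounds, n_trials, seed=21):
    """Sample each hyperparameter uniformly from its range and keep the best trial."""
    rng = random.Random(seed)
    trials = [tuple(rng.uniform(lo, hi) for lo, hi in bounds) for _ in range(n_trials)]
    best = min(trials, key=lambda params: objective(*params))
    return best, objective(*best), trials


# Same budget for both strategies: 9 evaluations
grid_trials = list(itertools.product([0.0, 0.5, 1.0], repeat=2))
best, best_value, random_trials = random_search(toy_objective, [(0.0, 1.0), (0.0, 1.0)], n_trials=9)

print("distinct 'important' values, grid:  ", len({t[0] for t in grid_trials}))
print("distinct 'important' values, random:", len({t[0] for t in random_trials}))
print("best grid value:  ", min(toy_objective(*t) for t in grid_trials))
print("best random value:", best_value)
```

On a given seed random search may or may not beat the grid, but it explores the important dimension three times more densely for the same cost.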

Bi-level Black-Box Optimization Problem

Definition (What is Black Box Optimization?)

Black Box: A system or function where the internal workings are not known or accessible. You can only observe the inputs and outputs.

Black-box optimization methods search for the optimum using only input-output evaluations, without needing to understand the internal mechanics.

- The overall shape of the upper-level objective function is unknown.

- Derivative-free optimization: gradients and Hessians are not needed.

Common Black-Box Optimization Methods

- Bayesian Optimization:
  - Builds a probabilistic model of the objective function and uses it to select the most promising hyperparameters to evaluate next.
  - It can be considered a surrogate-based derivative-free optimization algorithm.

- Evolutionary Algorithms:
  - Mimic the process of natural selection to evolve a population of candidate solutions (e.g., genetic algorithms).

- Random Search:
  - Randomly samples hyperparameter combinations from predefined distributions.

- Surrogate-based Derivative-Free Optimization Algorithms:
  - Use a surrogate model to approximate the objective function and guide the search for optimal hyperparameters.
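As one example of a black-box method, here is a minimal evolution strategy, a simple member of the evolutionary family listed above rather than a full genetic algorithm (there is no crossover). It only calls `objective(...)` and never uses gradients. The population size, mutation scale `sigma`, and function names are illustrative assumptions.

```python
import numpy as np


def evolution_strategy(objective, bounds, pop_size=20, n_offspring=40,
                       n_generations=50, sigma=0.5, seed=0):
    """Elitist evolution strategy: mutate random parents, keep the best of parents + offspring."""
    rng = np.random.default_rng(seed)
    lo, hi = np.array(bounds, dtype=float).T
    pop = rng.uniform(lo, hi, size=(pop_size, len(lo)))
    fit = np.array([objective(p) for p in pop])  # black-box evaluations only
    initial_best = fit.min()

    for _ in range(n_generations):
        parents = pop[rng.integers(pop_size, size=n_offspring)]
        # Gaussian mutation, clipped back into the search space
        children = np.clip(parents + rng.normal(0.0, sigma, size=parents.shape), lo, hi)
        child_fit = np.array([objective(c) for c in children])
        # Selection: survivors are the pop_size best of parents and children combined
        all_pop = np.vstack([pop, children])
        all_fit = np.concatenate([fit, child_fit])
        keep = np.argsort(all_fit)[:pop_size]
        pop, fit = all_pop[keep], all_fit[keep]

    return pop[0], fit[0], initial_best


def sphere(v):
    # toy black box with its minimum 0 at the origin
    return float(np.sum(v**2))


best_x, best_f, initial_best = evolution_strategy(sphere, [(-5.0, 5.0), (-5.0, 5.0)])
print(best_x, best_f, initial_best)
```

Because parents compete with their offspring for survival (elitist selection), the best objective value never gets worse from one generation to the next.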

Surrogate-based Derivative-Free Optimization Algorithm in Hyperparameter Optimization

1. Prepare hyperparameters for optimization
   - Map the finite set of possible values of each hyperparameter to a sequence of integers.
   - The resulting integer grid is the search domain.

2. Initial design
   - Create an initial experimental design by randomly sampling points from the domain.
   - For each sampled candidate, compute the upper-level objective function value by solving the lower-level problem,
     - e.g., by training and validating a model with that hyperparameter.

3. Set the evaluation counter
   - Record the number of evaluations performed so far.

4. Adaptive sampling
   - Iteratively select new candidates to evaluate based on previous results.

5. Surrogate model fitting
   - Use the data obtained so far to fit a surrogate model.
   - The surrogate is a cheap approximation of the expensive objective function.
   - Methods:
     - Gaussian Processes (GP): Probabilistic model that provides uncertainty estimates.
     - Radial Basis Functions (RBF): Approximate the response surface of the objective function.

6. Acquisition function optimization
   - Optimize an acquisition function built on the surrogate model.
   - This identifies the most promising candidate to evaluate next.
   - Exploration vs. exploitation trade-off:
     - Exploration: Searching new areas of the hyperparameter space.
     - Exploitation: Focusing on areas known to yield good results.

7. Lower-level problem solving
   - Solve the lower-level problem for the newly selected hyperparameter.
   - Obtain its upper-level objective function value.

8. Update
   - Add the new candidate and its objective value to the data, and increment the evaluation counter.
   - Go back to Step 5.

9. Stopping criterion
   - Stop if the termination condition is met,
     - e.g., a maximum number of evaluations, a time budget, or a convergence criterion.
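The steps above can be sketched compactly. This is a minimal illustration under several assumptions: the domain is a small array of integer codes, the surrogate is a Gaussian radial basis function interpolant with a fixed width, and the acquisition function is a simple weighted score that favours low predicted values (exploitation) and distance from already-evaluated points (exploration). Practical implementations use more careful surrogates (e.g., Gaussian processes with fitted kernels) and acquisition functions. All names are hypothetical, and `objective` stands in for solving the lower-level problem.

```python
import numpy as np


def fit_rbf_surrogate(X, f, eps=1.0):
    """Gaussian RBF interpolant through the evaluated points (X: n x d, f: n)."""
    f_mean = f.mean()
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    # a tiny diagonal term keeps the linear solve stable
    weights = np.linalg.solve(np.exp(-eps * d2) + 1e-8 * np.eye(len(X)), f - f_mean)

    def predict(Z):
        dz = np.sum((Z[:, None, :] - X[None, :, :]) ** 2, axis=-1)
        return np.exp(-eps * dz) @ weights + f_mean

    return predict


def surrogate_search(objective, domain, n_init=5, max_evals=20, explore_weight=0.5, seed=0):
    """Surrogate-based derivative-free search over a finite, integer-coded domain."""
    rng = np.random.default_rng(seed)
    domain = np.asarray(domain, dtype=float)
    if domain.ndim == 1:
        domain = domain[:, None]  # one row per candidate (output of Step 1)
    budget = min(max_evals, len(domain))

    # Step 2: initial design by random sampling without replacement
    evaluated = [int(i) for i in rng.choice(len(domain), size=n_init, replace=False)]
    values = [objective(domain[i]) for i in evaluated]
    n_evals = len(evaluated)  # Step 3: evaluation counter

    while n_evals < budget:  # Step 9: stop when the evaluation budget is used up
        X, f = domain[evaluated], np.asarray(values, dtype=float)
        predict = fit_rbf_surrogate(X, f)  # Step 5: fit the surrogate
        remaining = np.setdiff1d(np.arange(len(domain)), evaluated)
        cand = domain[remaining]

        # Step 6: score = scaled prediction minus scaled distance to nearest evaluated point
        pred = predict(cand)
        dist = np.min(np.linalg.norm(cand[:, None, :] - X[None, :, :], axis=-1), axis=1)
        pred_scaled = (pred - pred.min()) / (pred.max() - pred.min() + 1e-12)
        dist_scaled = dist / (dist.max() + 1e-12)
        pick = int(remaining[np.argmin(pred_scaled - explore_weight * dist_scaled)])

        # Steps 7-8: evaluate the expensive objective and update the data and counter
        evaluated.append(pick)
        values.append(objective(domain[pick]))
        n_evals += 1

    best = int(np.argmin(values))
    return domain[evaluated[best]], values[best], n_evals


def toy(z):
    # hypothetical expensive objective over integer codes 0..20, best at 13
    return float((z[0] - 13) ** 2)


x_best, f_best, n = surrogate_search(toy, np.arange(21), n_init=5, max_evals=10)
print(x_best, f_best, n)
```

With `max_evals` equal to the domain size the search degenerates into exhaustive evaluation, which makes a handy sanity check; with a smaller budget the surrogate decides which points are worth the expensive lower-level solve.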

Code Practice

Bilevel Optimization with Random search

```python
import random

import matplotlib.pyplot as plt
import numpy as np
import pyomo.environ as pyo

random.seed(21)


# Lower-level problem: solve for y given x
def solve_lower_level(x):
    model = pyo.ConcreteModel()
    model.y = pyo.Var(within=pyo.NonNegativeReals, bounds=(0, x))
    model.obj = pyo.Objective(expr=(model.y - 5) ** 2, sense=pyo.minimize)

    solver = pyo.SolverFactory('ipopt')  # requires the Ipopt solver to be installed
    solver.solve(model)
    return model.y.value


# Upper-level objective evaluated at (x, y)
def evaluate_upper_level(x, y):
    return x**2 + y**2


# Test the functions
for i in [0, 5, 10]:
    print(f"Optimal y for x={i}: {solve_lower_level(i)}")

for i in [0, 5, 10]:
    y_opt = solve_lower_level(i)
    print(f"Upper level objective for x={i}, y={y_opt}: {evaluate_upper_level(i, y_opt)}")


# Random search over x; y is always the lower-level optimum for the sampled x
def upper_level_random_search(num_iterations=1000):
    best_x = None
    best_y = None
    min_objective_value = float('inf')

    for i in range(num_iterations):
        x_candidate = random.uniform(0, 10)
        y_candidate = solve_lower_level(x_candidate)
        objective_value = evaluate_upper_level(x_candidate, y_candidate)

        if objective_value < min_objective_value:
            min_objective_value = objective_value
            best_x = x_candidate
            best_y = y_candidate
            print(f"Iteration {i+1}: New best found -> x = {best_x:.4f}, y = {best_y:.4f}, f(x,y) = {min_objective_value:.4f}")

    print("\nOptimal solution:")
    print(f"  x = {best_x:.4f}")
    print(f"  y = {best_y:.4f}")
    print(f"  Minimum f(x, y) = {min_objective_value:.4f}")

    return best_x, best_y, min_objective_value


upper_level_random_search()
```

Output:

```text
Iteration 1: New best found -> x = 1.6495, y = 1.6495, f(x,y) = 5.4417
Iteration 11: New best found -> x = 0.0318, y = 0.0318, f(x,y) = 0.0020

Optimal solution:
  x = 0.0318
  y = 0.0318
  Minimum f(x, y) = 0.0020
```

Visualization

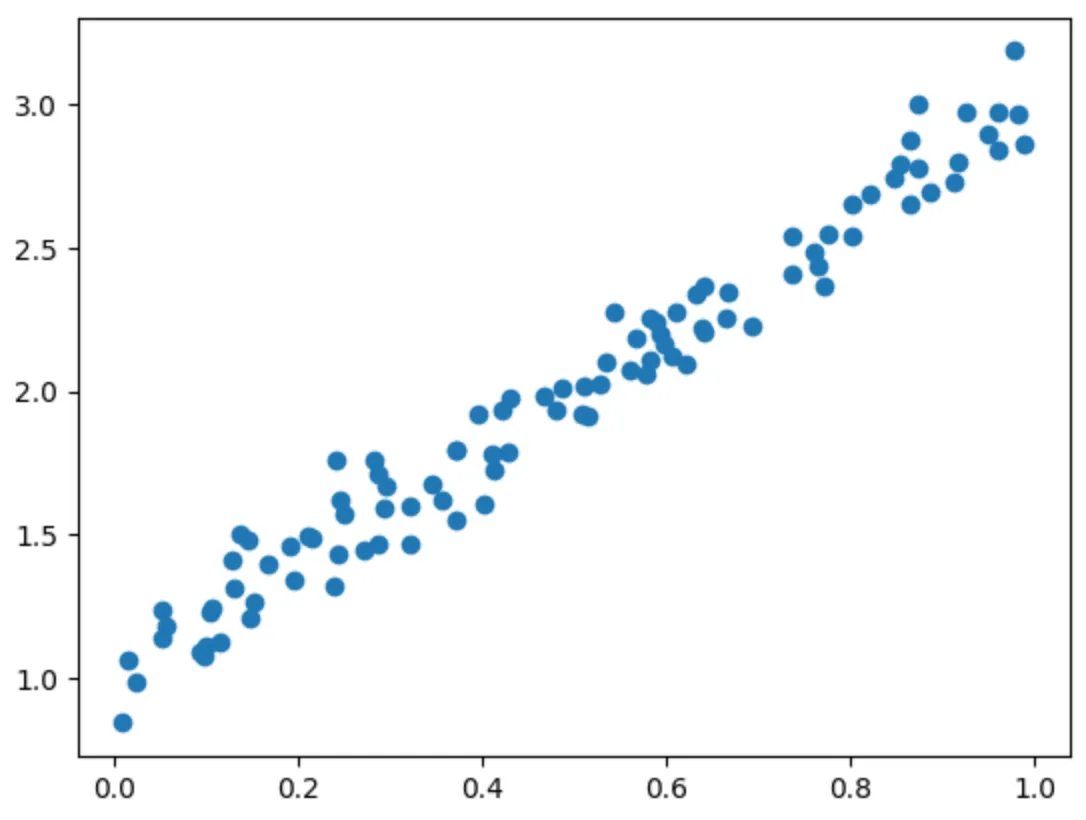
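The bilevel problem from the random-search exercise can be checked without a solver. Its lower-level problem is a one-variable convex quadratic with a box constraint, so its solution is 5 projected onto [0, x], i.e., y* = min(x, 5) for x ≥ 0. That explains why the random search reports y = x for small x. Substituting gives F(x) = x² + min(x, 5)², which is increasing on [0, 10], so the true optimum is x = 0; the random-search value x = 0.0318 is close to it. The helper names are hypothetical.

```python
import numpy as np


def lower_level_closed_form(x):
    """argmin over 0 <= y <= x of (y - 5)**2: the projection of 5 onto [0, x]."""
    return np.clip(5.0, 0.0, x)


def upper_level_objective(x):
    y = lower_level_closed_form(x)
    return x**2 + y**2


xs = np.linspace(0.0, 10.0, 1001)  # fine grid over the same range as random.uniform(0, 10)
values = upper_level_objective(xs)
print(xs[np.argmin(values)], values.min())  # 0.0 0.0
```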

```python
# Generate data: y = 2x + 1 + noise
x = np.random.rand(100)
y = 2 * x + 1 + np.random.normal(0, 0.1, 100)
plt.scatter(x, y)

# Split data: first 80% for training, last 20% for validation
split_index = int(0.8 * len(x))
x_train, x_val = x[:split_index], x[split_index:]
y_train, y_val = y[:split_index], y[split_index:]
```

Hyperparameter Optimization with Random Search

Use the following assumptions to generate the dataset:

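- True model: $y = 2x + 1 + \varepsilon$

- $x$: Generate 100 random values (uniform on $[0, 1)$, as `np.random.rand` does).

- $y$: Generate 100 values from the true model above, adding normally distributed error $\varepsilon$,
  - e.g., $\varepsilon \sim \mathcal{N}(0, 0.1^2)$, i.e., standard deviation 0.1 as in `np.random.normal(0, 0.1, 100)`.

- Use the same loss function at the upper and lower levels (in the code, the validation version averages the squared errors instead of summing them).

- Ridge regression loss: $L(m, b; \lambda) = \sum_{i} \big(y_i - (m x_i + b)\big)^2 + \lambda m^2$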

```python
# Lower-level problem: fit slope m and intercept b by ridge regression for a given lambda
def solve_lower_level(lambda_value, x_train, y_train):
    model = pyo.ConcreteModel()
    model.m = pyo.Var(within=pyo.Reals)
    model.b = pyo.Var(within=pyo.Reals)

    # Sum of squared errors plus an L2 penalty on the slope (the intercept is not penalized)
    def obj_func(model):
        return sum((y_train[i] - (model.m * x_train[i] + model.b)) ** 2
                   for i in range(len(x_train))) + lambda_value * (model.m ** 2)

    model.obj = pyo.Objective(expr=obj_func(model), sense=pyo.minimize)

    solver = pyo.SolverFactory('ipopt')
    solver.solve(model)
    return model.m.value, model.b.value


solve_lower_level(0.1, x_train, y_train)
# Output: (1.9602579300437144, 1.013876477469167)
```

```python
# Upper level: random search over lambda, scored on the validation set
def upper_level_random_search(num_iterations=1000):
    best_lambda = None
    best_m = None
    best_b = None
    min_val_loss = float('inf')

    for i in range(num_iterations):
        lambda_candidate = random.uniform(0, 1)
        m_candidate, b_candidate = solve_lower_level(lambda_candidate, x_train, y_train)
        # Mean squared validation error plus the same slope penalty
        val_loss = np.mean([(y_val[j] - (m_candidate * x_val[j] + b_candidate)) ** 2
                            for j in range(len(x_val))]) + lambda_candidate * (m_candidate ** 2)

        if val_loss < min_val_loss:
            min_val_loss = val_loss
            best_lambda = lambda_candidate
            best_m = m_candidate
            best_b = b_candidate
            print(f"Iteration {i+1}: New best -> λ = {best_lambda:.4f}, m = {best_m:.4f}, b = {best_b:.4f}, Val Loss = {min_val_loss:.4f}")

    print("\nOptimal solution:")
    print(f"  lambda = {best_lambda:.4f}")
    print(f"  m = {best_m:.4f}")
    print(f"  b = {best_b:.4f}")
    print(f"  Minimum Validation Loss = {min_val_loss:.4f}")

    return best_lambda, best_m, best_b, min_val_loss


upper_level_random_search()
```

Output:

```text
Iteration 1: New best -> λ = 0.4744, m = 1.8421, b = 1.0714, Val Loss = 1.6210
Iteration 3: New best -> λ = 0.2508, m = 1.9109, b = 1.0379, Val Loss = 0.9258
Iteration 7: New best -> λ = 0.2438, m = 1.9131, b = 1.0368, Val Loss = 0.9022
Iteration 9: New best -> λ = 0.2171, m = 1.9217, b = 1.0327, Val Loss = 0.8117
Iteration 10: New best -> λ = 0.1569, m = 1.9413, b = 1.0231, Val Loss = 0.6010
Iteration 20: New best -> λ = 0.1438, m = 1.9456, b = 1.0210, Val Loss = 0.5542
Iteration 22: New best -> λ = 0.0404, m = 1.9805, b = 1.0040, Val Loss = 0.1681
Iteration 54: New best -> λ = 0.0089, m = 1.9913, b = 0.9987, Val Loss = 0.0452
Iteration 119: New best -> λ = 0.0001, m = 1.9944, b = 0.9973, Val Loss = 0.0103

Optimal solution:
  lambda = 0.0001
  m = 1.9944
  b = 0.9973
  Minimum Validation Loss = 0.0103
```